Warning

This pipeline is deprecated but it can still be used. However, we won't test the pipeline anymore and won't accept any changes to it. If you run into any issues, reinstall the last Diffusers version that supported this model.

Text2Video-Zero

Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators is by Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, Zhangyang Wang, Shant Navasardyan, Humphrey Shi.

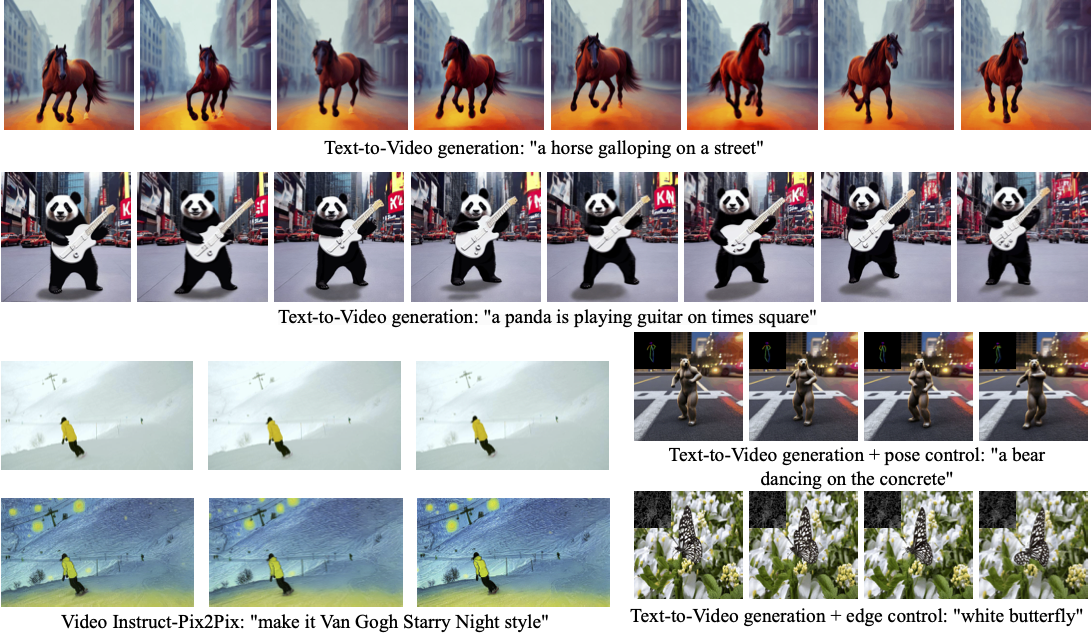

Text2Video-Zero enables zero-shot video generation using either:

1. A textual prompt
2. A prompt combined with guidance from poses or edges
3. Video Instruct-Pix2Pix (instruction-guided video editing)

Results are temporally consistent and closely follow the guidance and textual prompts.

The abstract from the paper is:

Recent text-to-video generation approaches rely on computationally heavy training and require large-scale video datasets. In this paper, we introduce a new task of zero-shot text-to-video generation and propose a low-cost approach (without any training or optimization) by leveraging the power of existing text-to-image synthesis methods (e.g., Stable Diffusion), making them suitable for the video domain. Our key modifications include (i) enriching the latent codes of the generated frames with motion dynamics to keep the global scene and the background time consistent; and (ii) reprogramming frame-level self-attention using a new cross-frame attention of each frame on the first frame, to preserve the context, appearance, and identity of the foreground object. Experiments show that this leads to low overhead, yet high-quality and remarkably consistent video generation. Moreover, our approach is not limited to text-to-video synthesis but is also applicable to other tasks such as conditional and content-specialized video generation, and Video Instruct-Pix2Pix, i.e., instruction-guided video editing. As experiments show, our method performs comparably or sometimes better than recent approaches, despite not being trained on additional video data.

You can find additional information about Text2Video-Zero on the project page, paper, and original codebase.

Usage example

Text-To-Video

To generate a video from a prompt, run the following Python code:

import mindspore as ms

from mindone.diffusers import TextToVideoZeroPipeline

import imageio

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

pipe = TextToVideoZeroPipeline.from_pretrained(model_id, mindspore_dtype=ms.float16)

prompt = "A panda is playing guitar on times square"

result = pipe(prompt=prompt).images

result = [(r * 255).astype("uint8") for r in result]

imageio.mimsave("video.mp4", result, fps=4)

You can change these parameters in the pipeline call:

* Motion field strength (see the paper, Sect. 3.3.1):
    * motion_field_strength_x and motion_field_strength_y. Default: motion_field_strength_x=12, motion_field_strength_y=12
* T and T' (see the paper, Sect. 3.3.1):
    * t0 and t1 in the range {0, ..., num_inference_steps - 1}, with t0 < t1. Default: t0=44, t1=47
* Video length:
    * video_length, the number of frames to generate. Default: video_length=8
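To get a feel for what the motion field strengths control, the sketch below assumes the motion field is a global translation that grows linearly with the frame index, which is how Sect. 3.3.1 of the paper describes it. `frame_offsets` is a hypothetical helper written for illustration; it is not part of the pipeline API, and the real pipeline applies the motion in latent space.

```python
def frame_offsets(frame_ids, strength_x=12, strength_y=12):
    """Return the (dx, dy) translation assumed for each frame id.

    Assumption: the shift is strength * frame_id, so frame 0 stays in place
    and later frames drift further along each axis.
    """
    return [(strength_x * k, strength_y * k) for k in frame_ids]


# Offsets for the default 8-frame video with the default strengths
offsets = frame_offsets(range(8))
for frame_id, (dx, dy) in enumerate(offsets):
    print(f"frame {frame_id}: dx={dx}, dy={dy}")
```

Under this assumption, larger strengths produce faster apparent motion, and setting both to 0 keeps every frame aligned with the first one.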

We can also generate longer videos by doing the processing in a chunk-by-chunk manner:

import imageio
import mindspore as ms
import numpy as np
from mindone.diffusers import TextToVideoZeroPipeline

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"
pipe = TextToVideoZeroPipeline.from_pretrained(model_id, mindspore_dtype=ms.float16)

seed = 0
video_length = 24  # 24 frames ÷ 4 fps = 6 seconds
chunk_size = 8
prompt = "A panda is playing guitar on times square"

# Generate the video chunk-by-chunk
result = []
chunk_ids = np.arange(0, video_length, chunk_size - 1)

for i in range(len(chunk_ids)):
    print(f"Processing chunk {i + 1} / {len(chunk_ids)}")
    ch_start = chunk_ids[i]
    ch_end = video_length if i == len(chunk_ids) - 1 else chunk_ids[i + 1]

    # Attach the first frame for Cross Frame Attention
    frame_ids = [0] + list(range(ch_start, ch_end))

    # Fix the seed for the temporal consistency
    # (assumes the pipeline accepts a NumPy Generator for `generator`)
    generator = np.random.default_rng(seed)
    output = pipe(prompt=prompt, video_length=len(frame_ids), generator=generator, frame_ids=frame_ids)

    # Drop the attached first frame before collecting the chunk
    result.append(output.images[1:])

# Concatenate chunks and save
result = np.concatenate(result)
result = [(r * 255).astype("uint8") for r in result]
imageio.mimsave("video.mp4", result, fps=4)
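The chunk bookkeeping in the example above can be checked without loading a model. The following sketch reproduces only the index arithmetic in plain Python; `plan_chunks` is a hypothetical helper name, not part of the library.

```python
def plan_chunks(video_length, chunk_size):
    """Return the frame_ids list for each chunk.

    Every chunk is prefixed with frame 0 (the cross-frame attention anchor),
    so each chunk contributes at most chunk_size - 1 new frames.
    """
    starts = list(range(0, video_length, chunk_size - 1))
    chunks = []
    for i, start in enumerate(starts):
        # The last chunk runs to the end of the video
        end = video_length if i == len(starts) - 1 else starts[i + 1]
        chunks.append([0] + list(range(start, end)))
    return chunks


chunks = plan_chunks(video_length=24, chunk_size=8)
for frame_ids in chunks:
    print(frame_ids)
```

With `video_length=24` and `chunk_size=8` this yields four chunks. After the attached first frame is dropped from each chunk, the new frames add up to exactly 24.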

Text-To-Video with Pose Control

To generate a video from a prompt with additional pose control:

1. Download a demo video

    from huggingface_hub import hf_hub_download

    filename = "__assets__/poses_skeleton_gifs/dance1_corr.mp4"
    repo_id = "PAIR/Text2Video-Zero"
    video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename)

2. Read video containing extracted pose images

    To extract pose from an actual video, read the ControlNet documentation.

    from PIL import Image
    import imageio

    reader = imageio.get_reader(video_path, "ffmpeg")
    frame_count = 8
    pose_images = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)]

3. Run StableDiffusionControlNetPipeline with our custom attention processor

    import mindspore as ms
    from mindone.diffusers import StableDiffusionControlNetPipeline, ControlNetModel
    from mindone.diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

    model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"
    # Use mindspore_dtype, as in the other examples on this page
    controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-openpose", mindspore_dtype=ms.float16)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        model_id, controlnet=controlnet, mindspore_dtype=ms.float16
    )

    # Set the attention processor
    pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))
    pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

    # Fix latents for all frames
    latents = ms.ops.randn((1, 4, 64, 64), dtype=ms.float16).repeat(len(pose_images), 1, 1, 1)

    prompt = "Darth Vader dancing in a desert"
    # Index 0 holds the frames whether the pipeline returns a tuple or an output class
    result = pipe(prompt=[prompt] * len(pose_images), image=pose_images, latents=latents)[0]
    imageio.mimsave("video.mp4", result, fps=4)
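The `CrossFrameAttnProcessor` used above implements the idea from the abstract: each frame attends to the first frame rather than to itself. The following is a minimal pure-Python sketch of that idea on toy data, not the library's implementation. The function names and the tiny vectors are illustrative assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for one frame (lists of token vectors)."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs = []
    for q in queries:
        weights = softmax([scale * sum(a * b for a, b in zip(q, k)) for k in keys])
        dim = len(values[0])
        outputs.append([sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)])
    return outputs


def cross_frame_attention(q_frames, k_frames, v_frames):
    """Each frame's queries attend to the keys and values of frame 0."""
    return [attention(q, k_frames[0], v_frames[0]) for q in q_frames]


# Two frames, one query token each, two key/value tokens per frame
q_frames = [[[1.0, 0.0]], [[1.0, 0.0]]]
k_frames = [[[1.0, 0.0], [0.0, 1.0]], [[5.0, 5.0], [2.0, -1.0]]]
v_frames = [[[1.0, 2.0], [3.0, 4.0]], [[9.0, 9.0], [7.0, 7.0]]]
out = cross_frame_attention(q_frames, k_frames, v_frames)
```

Because the second frame's own keys and values are ignored, frames with identical queries produce identical outputs here, which is the mechanism that keeps appearance consistent across frames.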

Text-To-Video with Edge Control

To generate a video from a prompt with additional Canny edge control, follow the same steps described above for pose-guided generation, using the Canny edge ControlNet model instead.

Video Instruct-Pix2Pix

To perform text-guided video editing (with InstructPix2Pix):

1. Download a demo video

    from huggingface_hub import hf_hub_download

    filename = "__assets__/pix2pix video/camel.mp4"
    repo_id = "PAIR/Text2Video-Zero"
    video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename)

2. Read video from path

    from PIL import Image
    import imageio

    reader = imageio.get_reader(video_path, "ffmpeg")
    frame_count = 8
    video = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)]

3. Run StableDiffusionInstructPix2PixPipeline with our custom attention processor

    import mindspore as ms
    from mindone.diffusers import StableDiffusionInstructPix2PixPipeline
    from mindone.diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

    model_id = "timbrooks/instruct-pix2pix"
    pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, mindspore_dtype=ms.float16)
    pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=3))

    prompt = "make it Van Gogh Starry Night style"
    result = pipe(prompt=[prompt] * len(video), image=video)[0]
    imageio.mimsave("edited_video.mp4", result, fps=4)

Tip

Make sure to check out the Schedulers guide to learn how to explore the tradeoff between scheduler speed and quality, and see the reuse components across pipelines section to learn how to efficiently load the same components into multiple pipelines.

mindone.diffusers.TextToVideoZeroPipeline


Bases: DeprecatedPipelineMixin, DiffusionPipeline, StableDiffusionMixin, TextualInversionLoaderMixin, StableDiffusionLoraLoaderMixin, FromSingleFileMixin

Source code in mindone/diffusers/pipelines/text_to_video_synthesis/pipeline_text_to_video_zero.py


mindone.diffusers.TextToVideoZeroPipeline.__call__(prompt, video_length=8, height=None, width=None, num_inference_steps=50, guidance_scale=7.5, negative_prompt=None, num_videos_per_prompt=1, eta=0.0, generator=None, latents=None, motion_field_strength_x=12, motion_field_strength_y=12, output_type='tensor', return_dict=True, callback=None, callback_steps=1, t0=44, t1=47, frame_ids=None)


The call function to the pipeline for generation.

| PARAMETER | DESCRIPTION |
|---|---|
| prompt | The prompt or prompts to guide image generation. |
| video_length | The number of generated video frames. |
| height | The height in pixels of the generated image. |
| width | The width in pixels of the generated image. |
| num_inference_steps | The number of denoising steps. More denoising steps usually lead to a higher quality image at the expense of slower inference. |
| guidance_scale | A higher guidance scale value encourages the model to generate images closely linked to the text prompt. |
| negative_prompt | The prompt or prompts to guide what to not include in video generation. |
| num_videos_per_prompt | The number of videos to generate per prompt. |
| eta | Corresponds to parameter eta (η) from the DDIM paper. Only applies to the DDIM scheduler. |
| generator | A random number generator. |
| latents | Pre-generated noisy latents sampled from a Gaussian distribution, to be used as inputs for video generation. Can be used to tweak the same generation with different prompts. If not provided, a latents tensor is generated by sampling using the supplied random generator. |
| output_type | The output format of the generated video. |
| return_dict | Whether or not to return a TextToVideoPipelineOutput instead of a plain tuple. |
| callback | A function that is called every callback_steps steps during inference. |
| callback_steps | The frequency at which the callback function is called. |
| motion_field_strength_x | Strength of motion in generated video along x-axis. See the paper, Sect. 3.3.1. |
| motion_field_strength_y | Strength of motion in generated video along y-axis. See the paper, Sect. 3.3.1. |
| t0 | Timestep t0. Should be in the range [0, num_inference_steps - 1]. See the paper, Sect. 3.3.1. |
| t1 | Timestep t1. Should be in the range [t0 + 1, num_inference_steps - 1]. See the paper, Sect. 3.3.1. |
| frame_ids | Indexes of the frames that are being generated. This is used when generating longer videos chunk-by-chunk. |

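| RETURNS | DESCRIPTION |
|---|---|
| TextToVideoPipelineOutput | The pipeline output; see the output class documented at the end of this page. |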
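The ranges documented for t0 and t1 in the parameter table can be checked before an expensive pipeline call. The sketch below is a hypothetical helper, not part of the library, and it makes no claim about whether the pipeline performs such a check itself.

```python
def check_motion_timesteps(t0, t1, num_inference_steps):
    """Validate t0 and t1 against the documented ranges.

    t0 must lie in [0, num_inference_steps - 1] and
    t1 must lie in [t0 + 1, num_inference_steps - 1].
    """
    last_step = num_inference_steps - 1
    if not 0 <= t0 <= last_step:
        raise ValueError(f"t0={t0} is outside [0, {last_step}]")
    if not t0 + 1 <= t1 <= last_step:
        raise ValueError(f"t1={t1} is outside [{t0 + 1}, {last_step}]")
    return t0, t1


# The defaults from the __call__ signature pass with 50 inference steps
print(check_motion_timesteps(t0=44, t1=47, num_inference_steps=50))
```

If you lower num_inference_steps below 48, the default t0=44 and t1=47 no longer fit these ranges, so both values need to be scaled down together.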

Source code in mindone/diffusers/pipelines/text_to_video_synthesis/pipeline_text_to_video_zero.py


mindone.diffusers.TextToVideoZeroPipeline.backward_loop(latents, timesteps, prompt_embeds, guidance_scale, callback, callback_steps, num_warmup_steps, extra_step_kwargs, cross_attention_kwargs=None)


Perform backward process given list of time steps.

| PARAMETER | DESCRIPTION |
|---|---|
| latents | Latents at time timesteps[0]. |
| timesteps | Time steps along which to perform backward process. |
| prompt_embeds | Pre-generated text embeddings. |
| guidance_scale | A higher guidance scale value encourages the model to generate images closely linked to the text prompt. |
| callback | A function that is called every callback_steps steps during inference. |
| callback_steps | The frequency at which the callback function is called. |
| extra_step_kwargs | Extra_step_kwargs. |
| cross_attention_kwargs | A kwargs dictionary that if specified is passed along to the attention processor. Default: None. |
| num_warmup_steps | Number of warmup steps. |

| RETURNS | DESCRIPTION |
|---|---|
| latents | Latents of backward process output at time timesteps[-1]. |

Source code in mindone/diffusers/pipelines/text_to_video_synthesis/pipeline_text_to_video_zero.py


mindone.diffusers.TextToVideoZeroPipeline.encode_prompt(prompt, num_images_per_prompt, do_classifier_free_guidance, negative_prompt=None, prompt_embeds=None, negative_prompt_embeds=None, lora_scale=None, clip_skip=None)


Encodes the prompt into text encoder hidden states.

| PARAMETER | DESCRIPTION |
|---|---|
| prompt | Prompt to be encoded. |
| num_images_per_prompt | Number of images that should be generated per prompt. |
| do_classifier_free_guidance | Whether to use classifier-free guidance or not. |
| negative_prompt | The prompt or prompts not to guide the image generation. If not defined, one has to pass negative_prompt_embeds instead. |
| prompt_embeds | Pre-generated text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, text embeddings will be generated from prompt. |
| negative_prompt_embeds | Pre-generated negative text embeddings. Can be used to easily tweak text inputs, e.g. prompt weighting. If not provided, negative_prompt_embeds will be generated from negative_prompt. |
| lora_scale | A LoRA scale that will be applied to all LoRA layers of the text encoder if LoRA layers are loaded. |
| clip_skip | Number of layers to be skipped from CLIP while computing the prompt embeddings. A value of 1 means that the output of the pre-final layer will be used for computing the prompt embeddings. |

Source code in mindone/diffusers/pipelines/text_to_video_synthesis/pipeline_text_to_video_zero.py


mindone.diffusers.TextToVideoZeroPipeline.forward_loop(x_t0, t0, t1, generator)


Perform DDPM forward process from time t0 to t1. This is the same as adding noise with corresponding variance.

| PARAMETER | DESCRIPTION |
|---|---|
| x_t0 | Latent code at time t0. |
| t0 | Timestep at t0. |
| t1 | Timestep at t1. |
| generator | A random number generator. |

| RETURNS | DESCRIPTION |
|---|---|
| x_t1 | Forward process applied to x_t0 from time t0 to t1. |
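The description of forward_loop says the forward process is equivalent to adding noise with the corresponding variance. A minimal scalar sketch of that statement follows, under the standard DDPM assumption that the signal is scaled by the square root of the product of the per-step alphas between t0 and t1. The linear beta schedule and the helper names are illustrative assumptions, not the pipeline's internals.

```python
import math
import random


def forward_coefficients(alphas, t0, t1):
    """Return (signal_scale, noise_scale) for jumping from step t0 to step t1."""
    alpha_prod = math.prod(alphas[t0:t1])
    return math.sqrt(alpha_prod), math.sqrt(1.0 - alpha_prod)


def forward_noise(x_t0, alphas, t0, t1, rng):
    """Apply the forward jump to a list of scalar latent values."""
    signal_scale, noise_scale = forward_coefficients(alphas, t0, t1)
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in x_t0]


# Illustrative linear beta schedule with 1000 steps (an assumption)
betas = [1e-4 + (0.02 - 1e-4) * i / 999 for i in range(1000)]
alphas = [1.0 - b for b in betas]

signal_scale, noise_scale = forward_coefficients(alphas, t0=100, t1=200)
x_t1 = forward_noise([0.5, -0.5], alphas, 100, 200, random.Random(0))
print(signal_scale, noise_scale)
```

The two coefficients always satisfy signal_scale² + noise_scale² = 1, so a unit-variance latent keeps unit variance after the jump, and when t0 equals t1 the input is returned unchanged.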

Source code in mindone/diffusers/pipelines/text_to_video_synthesis/pipeline_text_to_video_zero.py


mindone.diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero.TextToVideoPipelineOutput

dataclass


Bases: BaseOutput

Output class for zero-shot text-to-video pipeline.

| PARAMETER | DESCRIPTION |
|---|---|
| images | The denoised frames, as a list of PIL images or a NumPy array. |
| nsfw_content_detected | List indicating whether the corresponding generated image contains "not-safe-for-work" (nsfw) content. |

Source code in mindone/diffusers/pipelines/text_to_video_synthesis/pipeline_text_to_video_zero.py
